Outbound Interface in Oracle Apps R12

Integrating Oracle E-Business Suite (EBS) with other systems is a common need for businesses today. Outbound interface are key here, allowing you to export validated data from Oracle Apps R12 to other platforms in formats like JSON, CSV, or XML.

This article will walk you through designing outbound interface in Oracle Apps R12. You’ll learn at four different ways to implement them, complete with real-world examples, and share best practices to help you build strong, scalable integrations.

Table of Contents

Why Outbound Interfaces Matter

Key Design Principles of Outbound Interface

Outbound Interfac Design with Real-World Examples

Business Event Based Outbound Interface

Concurrent Program Based Batch Outbound Interface

Oracle Alert Based Outbound Interface

Database Trigger-Based Outbound Interface

Why Outbound Interfaces Matter

Outbound interfaces automate how Oracle EBS data exports to external systems like CRMs, WMSs, 3PL, or data lakes. When designed well, they significantly improve:

- Operational efficiency: Automating these exports cuts down on manual work.

- Data accuracy: Built-in validations ensure the data sent out is clean and consistent.

- Timeliness: Interfaces can operate instantly or on a set schedule.

- Auditability: Logging and monitoring features let you track exactly what was sent and when.

A good design also minimizes impact on your system’s performance, handles errors smoothly, and allows for easy recovery if something goes wrong.

Key Design Principles of Outbound Interface

You need to keep the below core principles in mind during designing of your outbound interfaces in Oracle Apps R12:

- Decouple extraction and delivery: Use staging(temporary) tables to separate the data extraction process from its delivery.

- Schedule and monitor: Use concurrent programs for scheduling and monitoring your interfaces.

- Capture changes efficiently: Track data changes using triggers, business events, or alerts.

- Deliver in modern formats: Whenever possible, prefer JSON flat files over older formats.

- Log every transaction: Maintain a comprehensive log for auditing and reprocessing purposes.

Outbound Interfac Design with Real-World Examples

Here are four common ways to design outbound interfaces in Oracle Apps R12, each with a specific scenario and steps for implementation.

1. Business Event-Based Outbound Interface

Business event based outbound interface is perfect when external systems need real-time, asynchronous updates with minimal delay.

- Use Case: Real-Time Customer Updates to CRM Whenever a customer account is created or updated in Oracle (specifically, HZ_CUST_ACCOUNTS), the external CRM needs to receive the updated record in JSON format.

- Design Steps:

- Subscribe to the business event:

- For customer Creation, you’d subscribe to oracle.apps.ar.hz.Organization.create

- For customer updates, you’d subscribe to oracle.apps.ar.hz.Organization.update.

- Create a custom PL/SQL subscriber: This code will write the necessary details to a staging table (XX_CUST_OUT_STG) and queue the record for further processing.

- A concurrent program/OIC api then picks up these records from the staging table, validates them, formats the JSON using UTL_FILE, and transfers it to the CRM via SFTP.

- Subscribe to the business event:

2. Concurrent Program-Based Batch Outbound Interface

Concurrent program based outbound interface is ideal for periodic batch updates when you’re dealing with a significant volume of data.

- Use Case: Nightly Item Master Export to WMS Every night at 3 AM, all active inventory items need to be sent to the warehouse management system.

- Design Steps:

- Develop a PL/SQL-based concurrent program:

- This program queries MTL_SYSTEM_ITEMS_B for active items.

- It inserts the data into a temporary table (XX_ITEM_OUT_TMP) for processing and auditing.

- It then validates the data and generates a JSON flat file using UTL_FILE.

- Finally, it places the file in an outbound directory for SFTP transfer.

- Schedule the concurrent program using the Oracle Apps Request Scheduler.

- Develop a PL/SQL-based concurrent program:

3. Alert-Based Outbound Interface

Use alert base outbound interface when the interface is rule-based, infrequent, and doesn’t require a dedicated batch job.

- Use Case: Compliance Alert on Large AP Invoices Whenever an AP invoice greater than $50,000 is approved, an external compliance monitoring system needs to be notified.

- Design Steps:

- Define a Periodic Alert in Oracle Alerts on AP_INVOICES_ALL for invoices that meet the criteria.

- The alert action inserts the relevant data into a staging table (XX_INV_COMPL_STG).

- An optional concurrent program can then format a JSON/CSV file and send it via email or FTP.

4. Database Trigger-Based Outbound Interface

Database Trigger-Based Outbound Interface approach is best when you need immediate capture of low-volume, high-priority data changes.

- Use Case: Real-Time Order Capture to Data Lake When a sales order (OE_ORDER_HEADERS_ALL) is booked, key order details need to be captured and pushed to the enterprise data lake.

- Design Steps:

- Add a row-level trigger on OE_ORDER_HEADERS_ALL for INSERT operations.

- This trigger writes the inserted data to a staging table (XX_ORDER_DL_STG).

- A background concurrent program periodically picks up these staged records, validates them, and generates a JSON flat file for transfer.

Outbound Interface Approach Comparison

Here’s a quick comparison of the four approaches:

| Approach | Best For |

| Business Event | Real-time, asynchronous updates |

| Concurrent Program | High-volume, scheduled batch exports |

| Alert | Rule-based, low-frequency triggers |

| Databse Trigger | Immediate, row-level data change capture |

To build robust and maintainable interfaces, always:

- Always use staging and temporary tables for intermediate data.

- Implement strong validation and error logging.

- Prefer JSON over CSV/XML unless a different format is specifically required.

- Use Oracle Alerts or Workflow notifications for monitoring your interfaces.

- Keep clear audit trails for troubleshooting and compliance.

Conclusion

Outbound interfaces in Oracle Apps R12 are essential for seamless enterprise integrations. The key is choosing the right mechanism – whether it’s business events, concurrent programs, alerts, or database triggers – based on your specific business needs, the frequency of updates, and the criticality of the data.

By combining Oracle’s native features with modern data formats like JSON, and by adopting disciplined staging and monitoring practices, you can build interfaces that are not only robust and scalable but also easy to maintain.

What are some of the data exchange challenges you’re currently facing in your Oracle EBS environment?

Appendix: Detailed Implementation Examples

This appendix outlines the components you’d typically find for a complete implementation of each outbound interface approach, including configuration, staging table definitions, and basic test scenarios.

1. Business Event-Based Outbound Interface: Real-Time Customer Updates to CRM

A. Configuration Elements

- Identify the business event(oracle.apps.ar.hz.Organization.create or oracle.apps.ar.hz.Organization.update) that will trigger after create/update the customer.

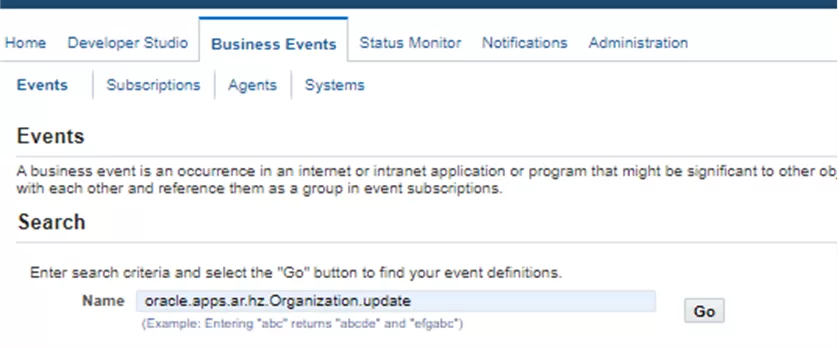

- Navigate to System Administrator –> Workflow–>Administrator Workflow –>Business Events –> Events

- Enter the business event name and press “Go”

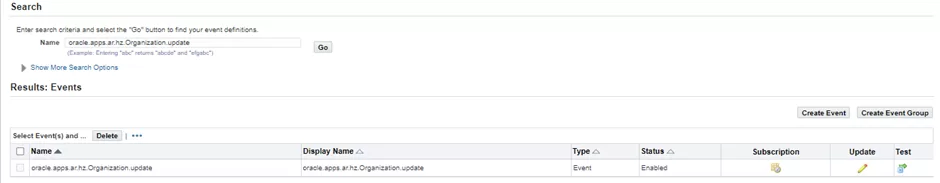

- Now click on “Subscription” to create the subscription

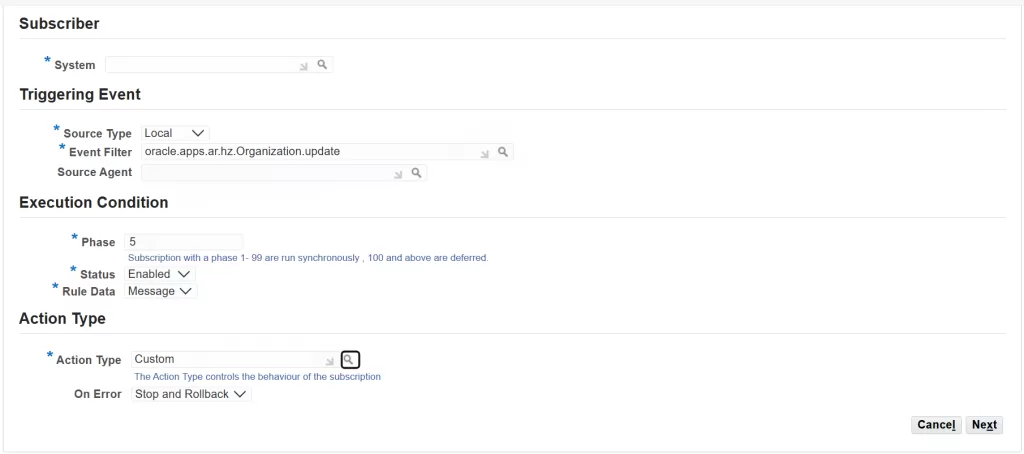

- Enter the below important fields and press “Next”

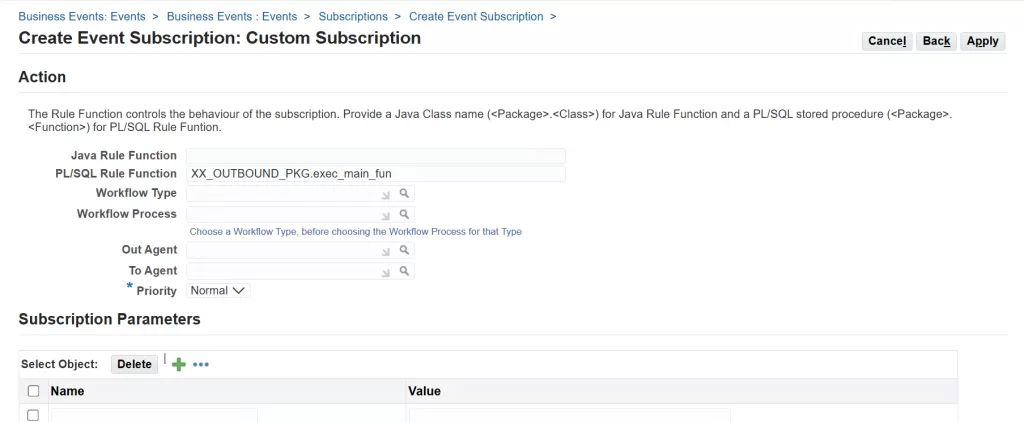

- Enter the PL/SQL Procedure as PL/SQL Rule Function and press apply

- SFTP Connection Details: Host, Port, Username, Authentication (e.g., SSH Key path configured in Oracle Wallet if using UTL_TCP for SFTP, or a pre-configured integration with a middleware).

- JSON Structure Definition: A clear specification of the expected JSON output format for customer data (e.g., {“customer_id”: “123”, “customer_name”: “ABC Corp”, “address”: “…”, “status”: “Active”}).

B. Staging Table (XX_CUST_OUT_STG)

- Purpose: To temporarily hold customer data pushed from the business event before processing and delivery.

- Table Structure (Example DDL)

CREATE TABLE XX_CUST_OUT_STG

(STAGING_ID NUMBER GENERATED BY DEFAULT ON NULL AS IDENTITY, CUSTOMER_ID NUMBER NOT NULL,

CUSTOMER_NAME VARCHAR2(240),

ACCOUNT_NUMBER VARCHAR2(30),

STATUS VARCHAR2(1),

LAST_UPDATE_DATE DATE,

PROCESS_STATUS VARCHAR2(20) DEFAULT 'N', -- N, S, E

ERROR ERROR_MESSAGE VARCHAR2(4000),

JSON_PAYLOAD CLOB, -- To store the full JSON

CREATED_BY NUMBER,

CREATION_DATE DATE,

CONSTRAINT PK_XX_CUST_OUT_STG PRIMARY KEY (STAGING_ID) );C. Custom PL/SQL Procedure for Business Event

PL/SQL function for Extract customers data

PROCEDURE extract_customers (p_party_id IN NUMBER)

IS

CURSOR cur_customers (p_party_id IN NUMBER)

IS

SELECT hca.account_number,

hp.party_id,

hp.party_name,

hp.address1,

hp.city,

hp.state,

hp.postal_code,

hp.person_first_name,

hp.person_last_name,

hp.email_address,

hl.location_code agent_number,

hca.primary_salesrep_id

FROM hz_parties hp,

hz_cust_accounts hca,

hz_party_sites hps,

qp_list_headers qlh,

hz_cust_acct_sites_all hcas,

hz_cust_site_uses_all hcsu,

po_location_associations_all pla,

hr_locations hl

WHERE hp.party_type = 'ORGANIZATION'

AND hp.status = 'A'

AND hca.party_id = hp.party_id

AND hca.status = 'A'

AND hca.price_list_id = qlh.list_header_id

AND qlh.name = 'WMS Customers'

AND hcas.status = 'A'

AND hps.party_id = hp.party_id

AND hcas.cust_account_id = hca.cust_account_id

AND hcas.party_site_id = hps.party_site_id

AND hcsu.cust_acct_site_id = hcas.cust_acct_site_id

AND pla.site_use_id = hcsu.site_use_id

AND hcas.org_id = hcsu.org_id

AND pla.org_id = hcas.org_id

AND pla.customer_id = hcas.cust_account_id

AND hl.location_id = pla.location_id

AND hcsu.site_use_code = 'SHIP_TO'

AND hcsu.primary_flag = 'Y'

AND hps.status = 'A'

AND hcas.status = 'A'

AND hcsu.status = 'A'

AND ( ( p_party_id IS NULL

AND ( ( hp.last_update_date >=

SYSDATE - 1 / 144

AND hp.creation_date < TRUNC (SYSDATE))

OR hp.creation_date >= SYSDATE - 1 / 144))

OR (p_party_id = 1 AND 1 = 1)

OR hp.party_id = p_party_id)

ORDER BY hca.account_number;

CURSOR cur_stag_customers (p_party_id IN NUMBER)

IS

SELECT party_id,

account_number,

party_name,

address1,

city,

state,

postal_code,

person_first_name,

person_last_name,

email_address,

agent_number,

error_code,

error_message,

created_by,

creation_date,

last_updated_by,

last_update_date,

last_update_login

FROM XX_HZ_CUSTOMERS_STG

WHERE party_id = p_party_id;

l_record_count NUMBER := 0;

l_stag_customers cur_stag_customers%ROWTYPE;

l_error_code VARCHAR2 (10);

l_error_msg VARCHAR2 (2000);

l_stag_count NUMBER := 0;

l_party_id NUMBER := p_party_id;

TYPE l_customer_tbl_type IS TABLE OF cur_customers%ROWTYPE

INDEX BY BINARY_INTEGER;

l_customer_tbl l_customer_tbl_type;

BEGIN

OPEN cur_customers (l_party_id);

FETCH cur_customers BULK COLLECT INTO l_customer_tbl;

IF l_customer_tbl.COUNT = 0

THEN

debug ('No Records');

ELSE

l_record_count := l_customer_tbl.COUNT;

debug ('Records: ' || l_record_count);

END IF;

CLOSE cur_customers;

IF l_customer_tbl.COUNT > 0

THEN

FOR i IN l_customer_tbl.FIRST .. l_customer_tbl.LAST

LOOP

OPEN cur_stag_customers (l_customer_tbl (i).party_id);

FETCH cur_stag_customers INTO l_stag_customers;

l_stag_count := cur_stag_customers %ROWCOUNT;

CLOSE cur_stag_customers;

IF l_stag_count = 0

THEN

l_stag_customers.party_id :=

l_customer_tbl (i).party_id;

l_stag_customers.account_number :=

l_customer_tbl (i).account_number;

l_stag_customers.party_name :=

l_customer_tbl (i).party_name;

l_stag_customers.address1 :=

l_customer_tbl (i).address1;

l_stag_customers.city := l_customer_tbl (i).city;

l_stag_customers.state :=

l_customer_tbl (i).state;

l_stag_customers.postal_code :=

l_customer_tbl (i).postal_code;

l_stag_customers.person_first_name :=

l_customer_tbl (i).person_first_name;

l_stag_customers.person_last_name :=

l_customer_tbl (i).person_last_name;

l_stag_customers.email_address :=

l_customer_tbl (i).email_address;

l_stag_customers.agent_number :=

l_customer_tbl (i).agent_number;

l_stag_customers.ERROR_CODE := 'SUCCESS';

change_stag_record (

p_distributor_rec => l_stag_customers,

p_type => 'I',

x_error_code => l_error_code,

x_error_msg => l_error_msg);

ELSE

IF (l_stag_customers.party_name !=

l_customer_tbl (i).party_name)

OR (l_stag_customers.address1 !=

l_customer_tbl (i).address1)

OR (l_stag_customers.postal_code !=

l_customer_tbl (i).postal_code)

THEN

|| l_customer_tbl (i).party_id);

l_stag_customers.party_id :=

l_customer_tbl (i).party_id;

l_stag_customers.account_number :=

l_customer_tbl (i).account_number;

l_stag_customers.party_name :=

l_customer_tbl (i).party_name;

l_stag_customers.address1 :=

l_customer_tbl (i).address1;

l_stag_customers.city :=

l_customer_tbl (i).city;

l_stag_customers.state :=

l_customer_tbl (i).state;

l_stag_customers.postal_code :=

l_customer_tbl (i).postal_code;

l_stag_customers.person_first_name :=

l_customer_tbl (i).person_first_name;

l_stag_customers.person_last_name :=

l_customer_tbl (i).person_last_name;

l_stag_customers.email_address :=

l_customer_tbl (i).email_address;

l_stag_customers.agent_number :=

l_customer_tbl (i).agent_number;

l_stag_customers.ERROR_CODE := 'SUCCESS';

change_stag_record (

p_customer_rec => l_stag_customers,

p_type => 'R',

x_error_code => l_error_code,

x_error_msg => l_error_msg);

END IF;

END IF;

END LOOP;

END IF;

EXCEPTION

WHEN OTHERS

THEN

debug (DBMS_UTILITY.format_error_backtrace || ':' || SQLERRM);

END extract_customers ;Main function for Business Event subscription in Oracle Apps R12

FUNCTION exec_main_fun (p_subscription_guid IN RAW,

p_event IN OUT NOCOPY wf_event_t)

RETURN VARCHARR IS

l_param_list wf_parameter_list_t;

l_param_name VARCHAR2 (240);

l_param_value VARCHAR2 (2000);

l_event_name VARCHAR2 (2000);

l_event_key VARCHAR2 (2000);

l_event_data VARCHAR2 (4000);

l_party_id NUMBER;

BEGIN

l_param_list := p_event.getparameterlist;

l_event_name := p_event.geteventname ();

l_event_key := p_event.geteventkey ();

l_event_data := p_event.geteventdata ();

IF l_param_list IS NOT NULL

THEN

FOR i IN l_param_list.FIRST .. l_param_list.LAST

LOOP

l_param_name := l_param_list (i).getname;

l_param_value := l_param_list (i).getvalue;

END LOOP;

END IF;

IF l_event_name IN ('oracle.apps.ar.hz.Organization.create',

'oracle.apps.ar.hz.Organization.update')

THEN

l_party_id := p_event.getvalueforparameter ('PARTY_ID');

extract_customers (l_party_id);

END IF;

RETURN 'SUCCESS';

EXCEPTION

WHEN OTHERS THEN

RETURN 'ERROR';

END exec_main_fun;You can create the JSON file easily in PL/SQL.

C. Testing Scenarios

- Test Case 1: New Customer Creation

- Action: Create a new customer in Oracle EBS using the Customers form (ARXCUDCI).

- Expected Result: A new record appears in XX_CUST_OUT_STG with PROCESS_STATUS = ‘N’. The concurrent program processes it, and a corresponding JSON file is sent to the CRM via SFTP.

- Test Case 2: Customer Update

- Action: Update an existing customer’s address or status in Oracle EBS.

- Expected Result: Similar to new creation, a record should appear in the staging table and be processed, leading to an updated customer record in the CRM.

- Test Case 3: Error Handling

- Action: Simulate a scenario where the SFTP server is unreachable or the JSON generation fails (e.g., by introducing invalid data in a test environment).

- Expected Result: The PROCESS_STATUS in XX_CUST_OUT_STG should change to ‘E’, and ERROR_MESSAGE should contain relevant details. The concurrent program’s log file should also show the error.

2. Concurrent Program-Based Batch Outbound Interface: Nightly Item Master Export to WMS

Concurrent Program based outbound interface is best for bulk data export from Oracle ebs to external system. Follow the below steps for configuring this in Oracle Apps.

Develop a PL/SQL-based concurrent program that:

- Queries MTL_SYSTEM_ITEMS_B for active items.

- Inserts data into a temporary table (XX_ITEM_OUT_TMP) for processing and audit.

- Validates and generates a JSON flat file with UTL_FILE.

- Places the file in an outbound directory for SFTP.

Schedule the concurrent program via Oracle Apps Request Scheduler.

A. Configuration Elements

- Concurrent Program Definition:

- Executable Type: PL/SQL Stored Procedure

- Executable Name: XX_ITEM_EXPORT_PKG.MAIN_PROCEDURE (Example)

- Program Name: “XX Item Master Export to WMS”

- Parameters: (e.g., P_ORG_ID, P_LAST_RUN_DATE for incremental loads).

- Scheduler: Defined schedule for the concurrent program (e.g., daily at 2 AM).

- Outbound Directory: Oracle UTL_FILE directory alias configured for the file system path where the JSON file will be placed.

- SFTP Command/Script: An OS-level script or a separate Oracle process (e.g., using DBMS_SCHEDULER external job or a middleware agent) to pick up the file from the outbound directory and SFTP it to the WMS.

B. Temporary/Staging Table (XX_ITEM_OUT_TMP)

- Purpose: To temporarily hold item data during the concurrent program run for processing, validation, and auditing before generating the final file. This table is usually truncated at the start of each run or after successful processing.

- Table Structure (Example DDL)

CREATE GLOBAL TEMPORARY TABLE XX_ITEM_OUT_TMP

( INVENTORY_ITEM_ID NUMBER NOT NULL,

ORGANIZATION_ID NUMBER NOT NULL,

ITEM_NUMBER VARCHAR2(40),

DESCRIPTION VARCHAR2(240),

ITEM_STATUS VARCHAR2(1),

PRIMARY_UOM_CODE VARCHAR2(3),

JSON_PAYLOAD_CLOB CLOB, -- Optional: to store individual item JSON if generated item by item

PROCESS_STATUS VARCHAR2(20) DEFAULT 'NEW',

ERROR_MESSAGE VARCHAR2(4000) ) ON COMMIT PRESERVE ROWS; -- Or ON COMMIT DELETE ROWS depending on needC. Testing Scenarios

- Test Case 1: Full Export

- Action: Run the concurrent program manually.

- Expected Result: A JSON file containing all active inventory items is generated in the outbound directory. The SFTP process picks it up and transfers it to the WMS.

- Test Case 2: Incremental Export (if applicable)

- Action: Run the program after some item updates.

- Expected Result: Only updated items are included in the generated JSON file.

- Test Case 3: File Content Validation

- Action: Inspect the generated JSON file for format, completeness, and data accuracy against source data in MTL_SYSTEM_ITEMS_B.

- Expected Result: The JSON file adheres to the defined structure and contains correct item details.

- Test Case 4: Scheduled Run Validation

- Action: Verify the program runs automatically at the scheduled time (e.g., 2 AM).

- Expected Result: The program completes successfully on schedule, and the file is transferred.

3. Alert-Based Outbound Interface: Compliance Alert on Large AP Invoices

A. Configuration Elements

- Oracle Alert Definition:

- Application: Payables

- Alert Name: “AP Invoice Compliance Alert”

- Alert Type: Periodic

- SQL Statement: As given below

- Action: Custom SQL statement to insert into staging table, or call a PL/SQL procedure (XX_COMPL_PKG.INSERT_INVOICE_DATA).

- Concurrent Program (Optional): If a separate program is needed for formatting and sending (e.g., for SFTP/email), define it similar to Approach 2.

SELECT AIA.INVOICE_ID,

AIA.INVOICE_NUM,

AIA.INVOICE_AMOUNT,

AIA.VENDOR_NAME,

AIA.INVOICE_DATE

FROM AP_INVOICES_ALL AIA

WHERE AIA.INVOICE_AMOUNT > 50000

AND AIA.WORKFLOW_APPROVAL_STATUS = 'APPROVED'

AND AIA.INVOICE_DATE >= TRUNC(SYSDATE) - 1 -- New invoices approved in last day

AND NOT EXISTS (SELECT 1 FROM XX_INV_COMPL_STG XICS WHERE XICS.INVOICE_ID = AIA.INVOICE_ID); -- Avoid re-processingB. Staging Table (XX_INV_COMPL_STG)

- Purpose: To store the details of large approved invoices identified by the Oracle Alert.

- Table Structure (Example DDL):

CREATE TABLE XX_INV_COMPL_STG

( STAGING_ID NUMBER GENERATED BY DEFAULT ON NULL AS IDENTITY, INVOICE_ID NUMBER NOT NULL,

INVOICE_NUM VARCHAR2(50),

INVOICE_AMOUNT NUMBER,

VENDOR_NAME VARCHAR2(240),

INVOICE_DATE DATE,

PROCESS_STATUS VARCHAR2(20) DEFAULT 'NEW',

ERROR_MESSAGE VARCHAR2(4000),

LAST_UPDATE_DATE DATE,

CONSTRAINT PK_XX_INV_COMPL_STG PRIMARY KEY (STAGING_ID)

);C. Testing Scenarios

- Test Case 1: Large Invoice Approval

- Action: Create and approve an AP invoice with an amount exceeding $50,000.

- Expected Result: After the periodic alert runs (e.g., every hour), a new record for this invoice appears in XX_INV_COMPL_STG. If a separate concurrent program is used, it should pick up and process this record.

- Test Case 2: Invoice Below Threshold

- Action: Create and approve an AP invoice with an amount less than $50,000.

- Expected Result: No record is created in XX_INV_COMPL_STG.

- Test Case 3: Already Processed Invoice

- Action: Approve an invoice that has already been captured by the alert (e.g., by manually setting its status to ‘NEW’ in staging for re-test, but the alert’s SQL should prevent re-capture normally).

- Expected Result: The alert should not re-insert the same invoice, or the processing logic should handle duplicates.

4. Database Trigger-Based Outbound Interface: Real-Time Material Transactions to Data Lake

A. Configuration Elements

- Database Trigger Definition:

- Trigger Name: XX_INV_TRXN_AIR_TRG(Example: After Insert Record on mtl_material_transactions)

- Timing: BEFORE INSERT ON mtl_material_transactions FOR EACH ROW.

- Trigger Logic: Calls a PL/SQL dbms_scheduler.create_job.

- Data Lake Connection: Details for how data is pushed to the data lake (e.g., SFTP, API endpoint, database link for direct inserts, or a Kafka connector).

B. Staging Table (XX_INV_TRANSACTIONS_STG)

- Purpose: To capture key transactions details immediately upon creation before being processed and pushed to the data lake.

- Table Structure (Example DDL)

CREATE TABLE XX_INV_TRANSACTIONS_STG

( transaction_id NUMBER

, transfer_transaction_id NUMBER

, transaction_date DATE

, transaction_type VARCHAR2(60)

, transaction_reference VARCHAR2(240)

, item VARCHAR2(30)

, transaction_quantity NUMBER

, lot_quantity NUMBER

, lot_number VARCHAR2(60)

, expiration_date DATE

, serial_number VARCHAR2(100)

, organization_code VARCHAR2(10)

, subinventory_code VARCHAR2(30)

, locator VARCHAR2(90)

, trnsfer_org_code VARCHAR2(3)

, transfer_subinventory VARCHAR2(30)

, transfer_locator VARCHAR2(90)

, PROCESS_STATUS VARCHAR2(30)

, error_message VARCHAR2(2000)

, created_by NUMBER

, creation_date DATE

, last_updated_by NUMBER

, last_update_date DATE

, last_update_login NUMBER

);CREATE OR REPLACE TRIGGER apps.XX_INV_TRXN_AIR_TRG

AFTER INSERT

ON inv.mtl_material_transactions

DECLARE

PRAGMA AUTONOMOUS_TRANSACTION;

l_transaction_id NUMBER := 0;

l_inventory_item_id NUMBER := 0;

l_organization_id NUMBER := 81;

BEGIN

--Submit the inv_transactions_pr for processing subinventory transaction as a asynchronous job_action

--So the below procedure can complete after transaction data commited into database

BEGIN

dbms_scheduler.create_job (

job_name => 'XX_TRXN_OUTBOUND_JOB',

job_type => 'PLSQL_BLOCK',

job_action =>

'BEGIN

XX_INV_OUTBOUND_PKG.inv_transactions_pr

( p_transaction_id =>'''||l_transaction_id||

''',p_item_id =>'''||l_inventory_item_id||

''',p_organization_id =>'''||l_organization_id||''' );

END;',

enabled => TRUE,

auto_drop => TRUE,

comments => 'Material Transactions');

EXCEPTION

WHEN OTHERS THEN

NULL;

END;

EXCEPTION

WHEN OTHERS THEN

NULL;

END;

/C. Testing Scenarios

Test Case 1: Create new material transaction

Action: Create a misc receipt

Expected Result: A new record for the transactions appears immediately in XX_INV_TRANSACTIONS_STG with PROCESS_STATUS = ‘NEW’. The background concurrent program should then pick this up and transfer the data to the data lake.

Test Case 2: Trigger Disablement Impact

Action: Temporarily disable the trigger and create a transaction.

Expected Result: No record appears in XX_INV_TRANSACTIONS_STG. This demonstrates the trigger’s role.

Test Case 3: Data Lake Integration Failure

Action: Simulate a failure in pushing data to the data lake (e.g., by misconfiguring the target endpoint).

Pingback: Best Way to Learn GL Interface in Oracle Apps R12

Pingback: Oracle EBS Integration with Third-Party Systems Using APIs